This article explains how to resolve the warning "Service 'SparkUI' could not bind on port 4054. Attempting port 4055." in a Big Data environment.

Error: Service 'SparkUI' could not bind on port 4054

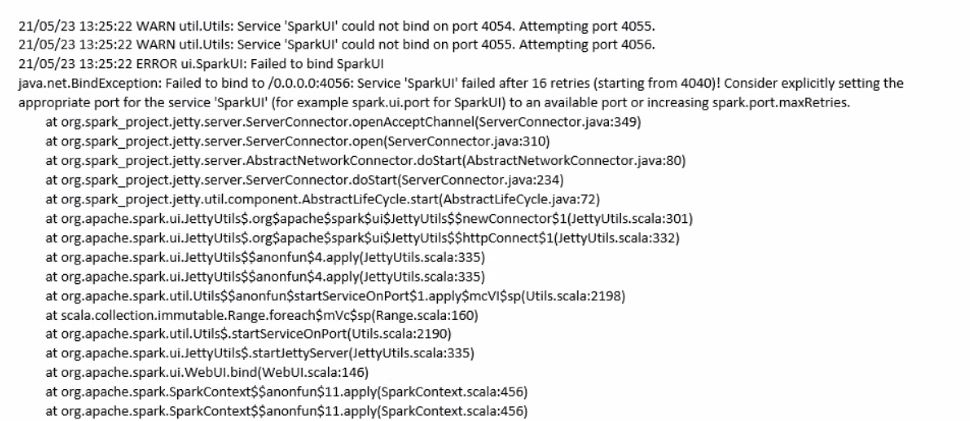

WARN util.Utils: Service 'SparkUI' could not bind on port 4054. Attempting port 4055.
WARN util.Utils: Service 'SparkUI' could not bind on port 4055. Attempting port 4056.
ERROR ui.SparkUI: Failed to bind SparkUI
java.net.BindException: Failed to bind to /0.0.0.0:4056: Service 'SparkUI' failed after 16 retries (starting from 4040)! Consider explicitly setting the appropriate port for the service 'SparkUI' (for example spark.ui.port for SparkUI) to an available port or increasing spark.port.maxRetries.
    at org.spark_project.jetty.server.ServerConnector.openAcceptChannel(ServerConnector.java:349)
    at org.spark_project.jetty.server.ServerConnector.open(ServerConnector.java:310)
    at org.spark_project.jetty.server.AbstractNetworkConnector.doStart(AbstractNetworkConnector.java:80)
    at org.spark_project.jetty.server.ServerConnector.doStart(ServerConnector.java:234)

Solution:

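This issue is simple to resolve. By default, the Spark UI listens on port 4040. When that port is already taken, typically by another Spark application running on the same host, Spark tries the next port and gives up after spark.port.maxRetries attempts (16 in the log above). The fix is to set the UI port explicitly to one that is free, for example by changing it from 4040 to 4041. With the Spark shell, pass the port using --conf:

[user ~] $ spark-shell --conf spark.ui.port=4041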

Spark tries consecutive ports: 4040, 4041, 4042, 4043, and so on. Each Spark application running on the same host takes the next free one.
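
Before choosing a value for spark.ui.port, it can help to check which port is actually free. The following is a minimal Python sketch that probes ports the same way the log suggests Spark does: starting at 4040 and moving up one port at a time for a limited number of retries. The function names `is_port_free` and `first_free_port` are illustrative and not part of Spark, and the result is only a snapshot, since another process may take the port before Spark starts.

```python
import socket


def is_port_free(port, host="0.0.0.0"):
    """Return True if a TCP socket can bind to (host, port) right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # Bind failed, most likely because the port is already in use.
            return False
        return True


def first_free_port(start=4040, max_retries=16, host="0.0.0.0"):
    """Probe start, start+1, ..., start+max_retries; return the first free port or None."""
    for port in range(start, start + max_retries + 1):
        if is_port_free(port, host):
            return port
    return None


if __name__ == "__main__":
    port = first_free_port()
    if port is None:
        print("No free port between 4040 and 4056; try a different range.")
    else:
        # Print a ready-to-run command using the port that was found.
        print(f"spark-shell --conf spark.ui.port={port}")
```

Run the script on the host where the Spark driver starts, then copy the printed command. If no port in the range is free, call `first_free_port` with a different `start` value.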

On Cloudera or Hortonworks distributions, set the port persistently in the Spark configuration file instead of on the command line. The standard Spark properties file is spark-defaults.conf. MapR distributions are configured the same way as Cloudera.
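
As a sketch of the persistent setting, assume the distribution uses Spark's standard properties file, spark-defaults.conf, which holds one whitespace-separated key and value per line, and assume port 4050 is free on the host. The entries might then look like this. The port number and the retry count are illustrative values only.

```
# Illustrative value; pick a port that is free on your host
spark.ui.port          4050
# Optional: let Spark probe more consecutive ports before giving up
spark.port.maxRetries  32
```

A value passed with --conf on the command line takes precedence over the same property in this file.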