Nowadays, Spark and Hive, used together, are among the most widely used components in big data analytics.

While reading a Hive table from Spark, you may get the following error: Caused by: NoSuchTableException: Table or view 'emp' not found in database 'myhive';

Hive version: 1.2

Spark version: 2.X.X

Scala version: 2.X.X

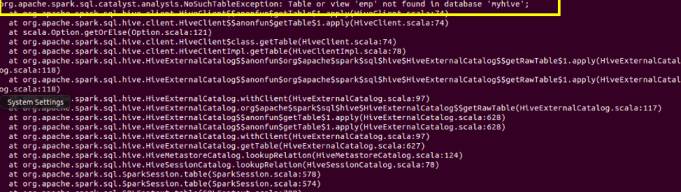

Error:

    org.apache.spark.sql.catalyst.analysis.NoSuchTableException: Table or view 'emp' not found in database 'myhive';
        at org.apache.spark.sql.hive.client.HiveClient$$anonfun$getTable$1.apply(HiveClient.scala:174)
        at org.apache.spark.sql.hive.client.HiveClient$$anonfun$getTable$1.apply(HiveClient.scala:174)
        at scala.Option.getOrElse(Option.scala:321)
        at org.apache.spark.sql.hive.client.HiveClient$class.getTable(HiveClient.scala:74)
        at org.apache.spark.sql.hive.client.HiveClientImpl.getTable(HiveClientImpl.scala:78)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$org$apache$spark$sql$hive$HiveExternalCatalog$$getRawTable$1.apply(HiveExternalCatalog.scala:218)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$org$apache$spark$sql$hive$HiveExternalCatalog$$getRawTable$1.apply(HiveExternalCatalog.scala:218)
    Caused by: NoSuchTableException: Table or view 'emp' not found in database 'myhive';
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:348)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:898)
        at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:907)
        at org.apache.spark.sql.hive.HiveExternalCatalog.getTable(HiveExternalCatalog.scala:727)
        at org.apache.spark.sql.hive.HiveMetastoreCatalog.lookupRelation(HiveMetastoreCatalog.scala:324)
        at org.apache.spark.sql.hive.HiveSessionCatalog.lookupRelation(HiveSessionCatalog.scala:170)
        at org.apache.spark.sql.SparkSession.table(SparkSession.scala:878)
        at org.apache.spark.sql.SparkSession.table(SparkSession.scala:174)

Solution:

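The error above is caused by a miscommunication between Spark and Hive: Spark is not reading Hive's configuration, so it does not look up the table in the metastore where Hive registered it. When hive-site.xml is not in Spark's configuration directory, Spark creates its own local metastore (metastore_db in the current working directory) instead. The fix is simply to make Hive's XML configuration files available to Spark.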
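
As a quick diagnostic, the minimal sketch below checks whether Spark's configuration directory contains a hive-site.xml and, if so, which metastore it points to through the standard hive.metastore.uris property. It assumes you pass in Spark's conf directory (typically $SPARK_HOME/conf); the function names read_site_xml and spark_metastore_uris are hypothetical helpers for illustration, not part of Spark or Hive.

```python
import os
import xml.etree.ElementTree as ET


def read_site_xml(path):
    """Parse a Hadoop/Hive-style *-site.xml file into a {name: value} dict."""
    props = {}
    # Each setting is a <property><name>...</name><value>...</value></property>
    # element directly under the <configuration> root.
    for prop in ET.parse(path).getroot().findall("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def spark_metastore_uris(spark_conf_dir):
    """Return hive.metastore.uris from the hive-site.xml Spark will read.

    Raises FileNotFoundError when Spark's conf directory has no hive-site.xml,
    the likely cause of the NoSuchTableException above. Returns None when the
    file exists but does not set hive.metastore.uris.
    """
    path = os.path.join(spark_conf_dir, "hive-site.xml")
    if not os.path.isfile(path):
        raise FileNotFoundError(
            f"{path} is missing; Spark cannot see Hive's metastore settings"
        )
    return read_site_xml(path).get("hive.metastore.uris")
```

For example, spark_metastore_uris(os.path.join(os.environ["SPARK_HOME"], "conf")) should return something like a thrift:// URI on a cluster with a remote metastore. A None result does not necessarily mean a broken setup: some installations configure the metastore through JDBC properties such as javax.jdo.option.ConnectionURL instead.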

Here is the simplest solution, and it works for me. Integrating Hive and Spark comes down to configuration: copy Hive's configuration file ($HIVE_HOME/conf/hive-site.xml) into Spark's conf directory ($SPARK_HOME/conf), and copy Hadoop's core-site.xml and hdfs-site.xml there as well.
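
The copy step can be sketched in Python as follows. This is an illustrative helper, not an official tool: copy_configs is a hypothetical name, and it assumes you know where your Hive, Hadoop, and Spark configuration directories live. It fails loudly if any of the three files is missing rather than copying a partial set.

```python
import os
import shutil

# Files Spark needs to talk to Hive: Hive's own settings plus the Hadoop
# client settings for the filesystem and HDFS.
HIVE_FILES = ["hive-site.xml"]
HADOOP_FILES = ["core-site.xml", "hdfs-site.xml"]


def copy_configs(hive_conf_dir, hadoop_conf_dir, spark_conf_dir):
    """Copy Hive and Hadoop config files into Spark's conf directory.

    Returns the list of destination paths that were written.
    """
    sources = [os.path.join(hive_conf_dir, n) for n in HIVE_FILES]
    sources += [os.path.join(hadoop_conf_dir, n) for n in HADOOP_FILES]
    # Check everything first so a missing file does not leave a half-copied conf dir.
    missing = [src for src in sources if not os.path.isfile(src)]
    if missing:
        raise FileNotFoundError(f"missing config files: {missing}")
    os.makedirs(spark_conf_dir, exist_ok=True)
    copied = []
    for src in sources:
        dst = os.path.join(spark_conf_dir, os.path.basename(src))
        shutil.copy2(src, dst)  # copy2 also preserves file timestamps
        copied.append(dst)
    return copied
```

A typical call would be copy_configs(os.path.join(os.environ["HIVE_HOME"], "conf"), os.environ["HADOOP_CONF_DIR"], os.path.join(os.environ["SPARK_HOME"], "conf")), assuming those environment variables are set on your machine. Restart any running Spark session afterwards so it picks up the new files.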

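Summary: When integrating Spark and Hive, take care when copying configuration files between them: Hive's hive-site.xml must be copied into Spark's conf directory (and vice versa where your setup needs it), and Hadoop's core-site.xml and hdfs-site.xml must be copied into the Spark conf directory as well.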