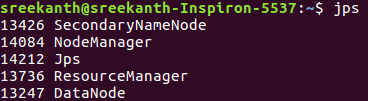

I installed Hadoop on a single-node cluster and started all daemons with the start-all.sh command. After that, the NameNode web UI (localhost:50070) did not open. Then I checked “jps”, which showed the following:

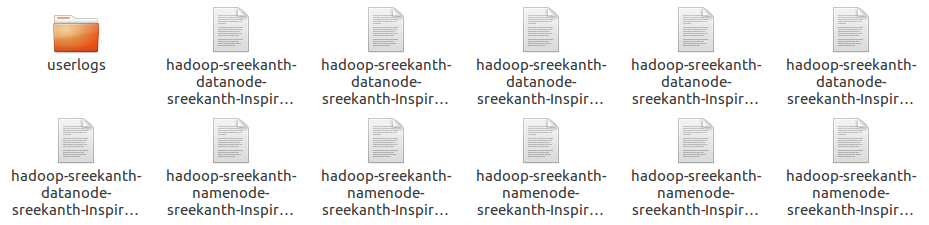

After that, I checked the NameNode log files in the Hadoop cluster.

How to check Hadoop log files in a Hadoop cluster

The snapshot below shows all the Hadoop log files, for example the NameNode, DataNode, and Secondary NameNode logs.

Go to /home/sreekanth/Hadoop/hadoop-2.6.0/logs (the logs directory inside the Hadoop installation).
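The same check can be done from the terminal. The sketch below assumes the default Hadoop 2.x log naming, hadoop-<user>-<daemon>-<hostname>.log; the function names latest_log and check_not_formatted are hypothetical helpers, not Hadoop commands.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): find the newest NameNode log and search it
# for the "not formatted" error. Assumes default Hadoop 2.x log file names:
#   hadoop-<user>-<daemon>-<hostname>.log

# Print the newest log file for one daemon (namenode, datanode, secondarynamenode).
latest_log() {
  local logdir="$1" daemon="$2"
  # ls -t lists newest first; the error for "no matching file" is silenced
  ls -t "$logdir"/hadoop-*-"$daemon"-*.log 2>/dev/null | head -n 1
}

# Print matching lines (with line numbers) from the newest NameNode log.
check_not_formatted() {
  local log
  log=$(latest_log "$1" namenode)
  if [ -z "$log" ]; then
    echo "no namenode log found in $1" >&2
    return 2
  fi
  grep -n "NameNode is not formatted" "$log"
}
```

For example, check_not_formatted /home/sreekanth/Hadoop/hadoop-2.6.0/logs prints the offending lines, or nothing if the error is absent.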

The NameNode log file shows the error java.io.IOException: NameNode is not formatted. Here is the full NameNode log from the single-node Hadoop cluster:

    2019-12-14 12:35:54,367 INFO org.apache.hadoop.hdfs.server.common.Storage: Lock on /home/sreekanth/data/dfs/name/in_use.lock acquired by nodename 13083@sreekanth-Inspiron-5537
    2019-12-14 12:35:54,370 WARN org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Encountered exception loading fsimage
    java.io.IOException: NameNode is not formatted.
        at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:212)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1020)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:739)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:536)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:595)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:762)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:746)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1438)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1504)
    2019-12-14 12:35:54,375 INFO org.mortbay.log: Stopped ...:50070
    2019-12-14 12:35:54,477 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Stopping NameNode metrics system...
    2019-12-14 12:35:54,478 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NameNode metrics system stopped.
    2019-12-14 12:35:54,478 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NameNode metrics system shutdown complete.
    2019-12-14 12:35:54,478 FATAL org.apache.hadoop.hdfs.server.namenode.NameNode: Failed to start namenode.
    java.io.IOException: NameNode is not formatted.
        at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:212)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1020)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:739)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:536)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:595)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:762)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:746)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1438)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1504)
    2019-12-14 12:35:54,479 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 1
    2019-12-14 12:35:54,486 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at sreekanth-Inspiron-5537/127.0.1.1
    ************************************************************/

How to resolve java.io.IOException: NameNode is not formatted in a few simple steps:

Step 1: Stop all Hadoop daemons with the following command:

stop-all.sh

Step 2: Edit the hdfs-site.xml file (in hadoop-2.6.0/etc/hadoop) so that it contains the exact paths and settings shown below.

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/home/sreekanth/data/dfs/name</value>
        <final>true</final>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/home/sreekanth/data/dfs/data</value>
        <final>true</final>
      </property>
    </configuration>
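To confirm which directories Hadoop actually resolves from this file, the sketch below uses the real hdfs getconf -confKey command and creates the directories if they are missing. It assumes hdfs is on PATH and that each property holds a single directory (both properties also accept a comma-separated list); uri_to_path and prepare_storage_dirs are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): show the storage directories Hadoop resolves
# from hdfs-site.xml and create them if missing.
# Assumes `hdfs` is on PATH and each property holds ONE directory.

# Turn "file:/path" or "file:///path" into a plain filesystem path.
uri_to_path() {
  local uri="$1"
  uri="${uri#file://}"   # file:///home/x -> /home/x
  uri="${uri#file:}"     # file:/home/x   -> /home/x
  printf '%s\n' "$uri"
}

prepare_storage_dirs() {
  local key value dir
  for key in dfs.namenode.name.dir dfs.datanode.data.dir; do
    # Ask Hadoop for the effective value instead of parsing the XML by hand
    value=$(hdfs getconf -confKey "$key") || return 1
    dir=$(uri_to_path "$value")
    echo "$key = $value -> $dir"
    mkdir -p "$dir"
  done
}
```

Running prepare_storage_dirs should print /home/sreekanth/data/dfs/name and /home/sreekanth/data/dfs/data for the configuration above; a different path means Hadoop is reading another hdfs-site.xml.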

Step 3: Once the configuration is saved, start all daemons with the following command:

start-all.sh

After completing these steps, verify that all services are running. If the same error still appears, follow the resolution below.

Step 1: Set the exact paths and configuration in the hdfs-site.xml file, as shown above.

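Step 2: Format the NameNode from the terminal with the following command. Formatting erases any existing HDFS metadata, so only do this on a new cluster or one whose data you can lose: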

hadoop namenode -format
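In Hadoop 2.x, hadoop namenode -format still works but prints a deprecation notice; hdfs namenode -format is the current form. The sketch below formats only when the name directory looks unformatted, using the presence of current/VERSION (which formatting creates) as a heuristic. The helper names and the single-directory argument are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): format the NameNode only when its storage
# directory looks unformatted. Formatting erases existing HDFS metadata.
# Assumes a single dfs.namenode.name.dir, passed as a plain path.

# Heuristic: a formatted NameNode directory contains current/VERSION.
is_formatted() {
  [ -f "$1/current/VERSION" ]
}

format_if_needed() {
  local name_dir="$1"
  if is_formatted "$name_dir"; then
    echo "$name_dir is already formatted; skipping"
  else
    hdfs namenode -format
  fi
}
```

For the configuration above, the call would be format_if_needed /home/sreekanth/data/dfs/name.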

Step 3: Check the NameNode log files. If the log still shows an error, stop the services and then start the daemons again.
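Instead of reading the whole log for Step 3, a sketch like the following prints only the FATAL and ERROR entries. It assumes the default log line layout visible in the log above ("YYYY-MM-DD HH:MM:SS,mmm LEVEL class: message"); log_errors is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): print FATAL and ERROR entries, with line
# numbers, from one Hadoop daemon log. Returns non-zero if none are found.
# Assumes the default layout: "YYYY-MM-DD HH:MM:SS,mmm LEVEL class: message".
log_errors() {
  local log="$1"
  grep -nE '^[0-9]{4}-[0-9]{2}-[0-9]{2} [0-9:,]+ (FATAL|ERROR) ' "$log"
}
```

For example, log_errors "$(ls -t /home/sreekanth/Hadoop/hadoop-2.6.0/logs/hadoop-*-namenode-*.log | head -n 1)" || echo "no FATAL/ERROR entries" checks the newest NameNode log. WARN lines are skipped on purpose to reduce noise, although in the log above the exception also appears under WARN before the FATAL entry.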

Step 4: Once the above steps are complete, start all services.
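To verify the services, the jps check from the top of this post can be scripted. This sketch reads jps output on standard input and reports each expected daemon; it assumes a single-node Hadoop 2.x cluster started with start-all.sh runs NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager. check_daemons is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): read `jps` output ("<pid> <MainClass>") on
# stdin and report which expected single-node Hadoop 2.x daemons are running.
# Returns 1 if any daemon is missing.
check_daemons() {
  local out d missing=0
  out=$(cat)
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Exact match on the second column, so "NameNode" does not match
    # "SecondaryNameNode"
    if printf '%s\n' "$out" | awk -v d="$d" '$2 == d {found=1} END {exit !found}'; then
      printf '%-8s%s\n' OK "$d"
    else
      printf '%-8s%s\n' MISSING "$d"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: jps | check_daemons. A "MISSING NameNode" line means the NameNode log should be checked again.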

What is the NameNode?

In the Hadoop ecosystem, the NameNode stores the HDFS metadata (the file system namespace and the locations of each file's blocks), which is why it is called the master node of a Hadoop cluster. It manages all DataNodes (the slave nodes).

Summary: A single-node Hadoop cluster is not properly installed until the NameNode starts. This post showed Hadoop admins and developers, with pictures, how to get the NameNode running after the "NameNode is not formatted" error, and how to find the Hadoop log files using the simple path shown above.